Response Automation

An AI-powered tool that automates answering external security and risk assessment questionnaires.

Prerequisites:

- RegML and Response Automation are both enabled by an administrator.

- The RegML backend and vector database have been deployed to your environment.

- The Harvester job has already processed the information selected as sources.

- Your RegScale environment is configured correctly to communicate with the other parts of the RegML infrastructure.

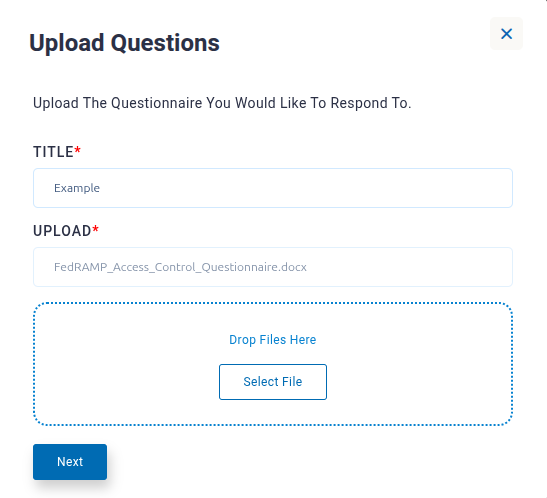

Starting a New Response Automation:

- Click Response Automation under Modules.

- Click the blue "Start Response" button in the upper right corner.

- Enter a title to identify your Response Automation entry.

- Select the document to upload. Questions will be extracted from this document.

  - Supported formats are PDF (.pdf), Excel (.xls, .xlsx), and Word (.docx).

- Click Next.

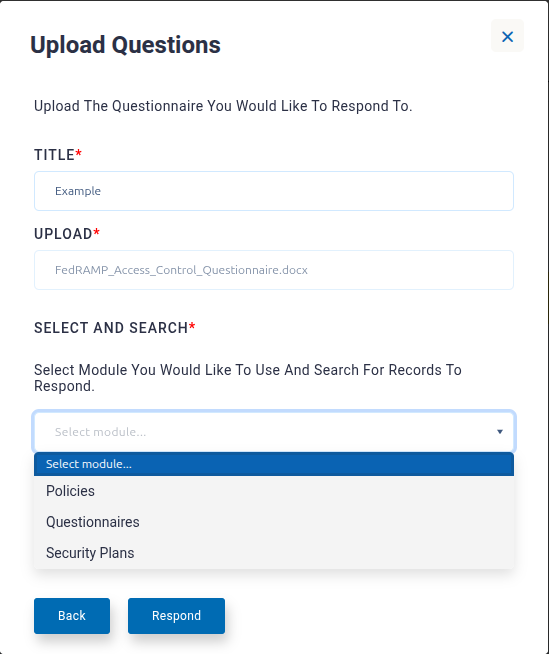

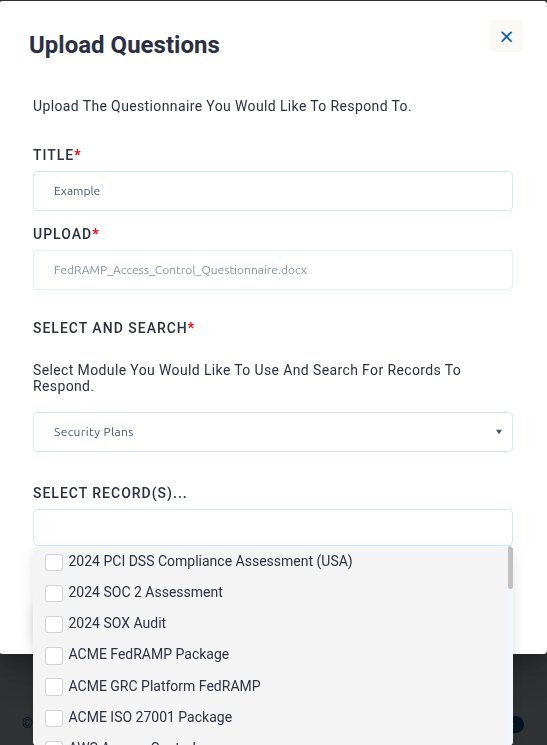

- Search for and select the policies, questionnaires, and/or security plans that the language model should draw on for relevant information when answering questions.

- Click the Respond button.

- Once the job is completed, its status will change from "In Progress" to "Finished".
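The supported upload formats listed in the steps above can be checked locally before you start a new entry. The following is a minimal sketch, not part of RegScale; the function name `is_supported_questionnaire` and the sample file names are hypothetical, and only the extension list comes from this page.

```python
from pathlib import Path

# Extensions taken from the supported-formats note in the steps above.
SUPPORTED_EXTENSIONS = {".pdf", ".xls", ".xlsx", ".docx"}


def is_supported_questionnaire(filename: str) -> bool:
    """Return True if the file extension is one that this page lists as supported."""
    # Compare case-insensitively so "REPORT.PDF" is treated like "report.pdf".
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    for name in ["vendor_assessment.PDF", "questionnaire.xlsx", "legacy_form.doc"]:
        status = "supported" if is_supported_questionnaire(name) else "not supported"
        print(f"{name}: {status}")
```

This only inspects the file name. It does not confirm that the file contents are valid or that questions can be extracted from them.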

Viewing Questions and Answers in the List View

- Once a Response Automation job is Finished, you can drill down into the record and click “Responses” in the upper right menu.

- Not every question is guaranteed to have enough citations to be answered.

- If insufficient context was found for a question, the answer is left blank.

- From the list view of responses and questions, you can select and delete any questions that do not belong in the set.

- Clicking the “More Options” (3 dots) menu on the right will allow you to open the Review Responses page.

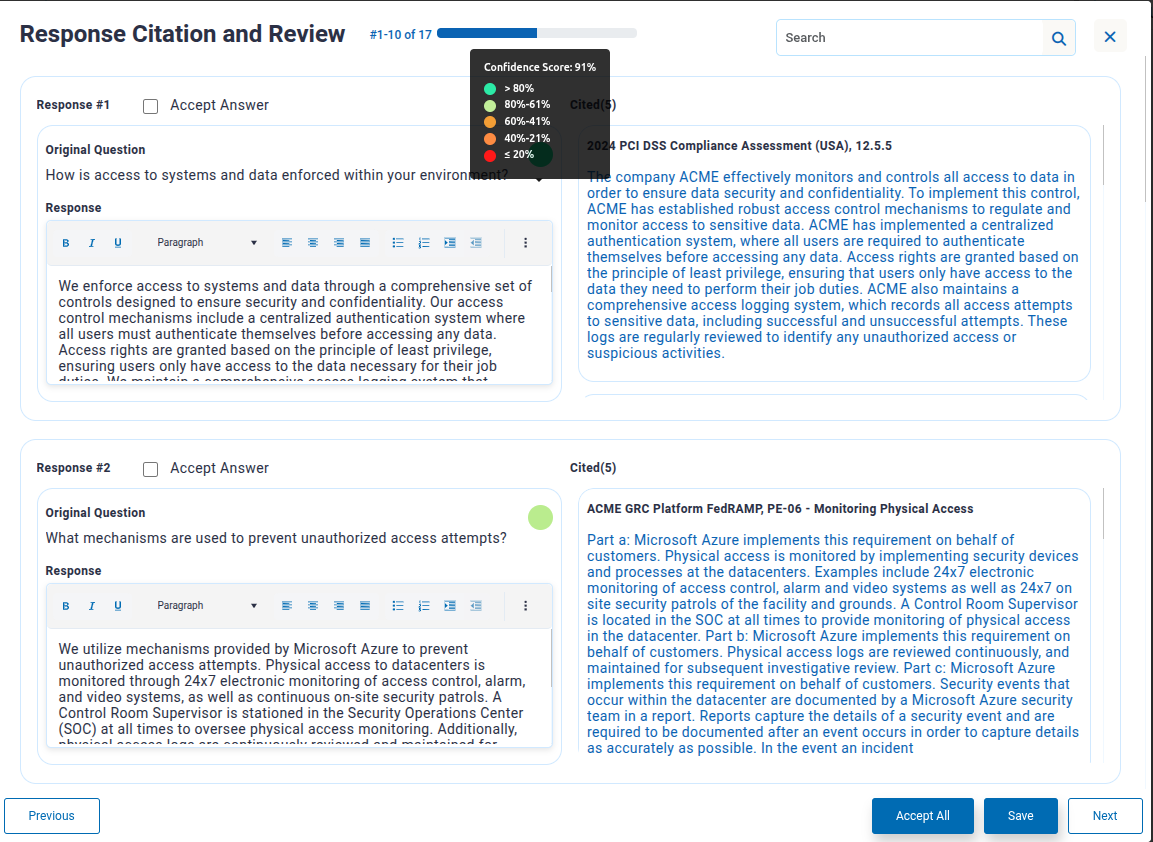
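Because questions without sufficient context are left blank, it can help to think of the review as a triage step: find the blank answers first, then decide whether to answer them by hand or delete the question. The sketch below illustrates that idea only. The `Response` record shape and the sample data are hypothetical and do not reflect RegScale's data model.

```python
from dataclasses import dataclass


@dataclass
class Response:
    # Hypothetical record shape, for illustration only.
    question: str
    answer: str


def needs_manual_answer(responses):
    """Return the responses whose answer is empty or whitespace-only."""
    return [r for r in responses if not r.answer.strip()]


if __name__ == "__main__":
    # Made-up sample data.
    sample = [
        Response("Do you encrypt data at rest?", "Yes, see the encryption policy."),
        Response("Describe your incident response process.", ""),
        Response("Who is your data protection officer?", "   "),
    ]
    for r in needs_manual_answer(sample):
        print(f"Blank answer: {r.question}")
```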

Reviewing the Results of Individual Responses

- Responses are reviewed in sets of 10. You can paginate through the responses by clicking the Previous or Next buttons at the bottom of the set of records.

- The citations window to the right of the response shows the excerpts of text from your specified source documents that were used by the language model to answer this question. Take note that, due to processing requirements, these citations are often not neatly divided into complete sentences or paragraphs. A citation may begin or end with an incomplete sentence.

- The colored indicator in the top right corner of the response pane shows the confidence score for that individual response.

  - This score is calculated using a proprietary algorithm based on the quantity and relevance of the citations used by the LLM to generate the response.

  - Hovering over the confidence score indicator will show the scale used to grade confidence scores.

- The WYSIWYG editor allows you to modify any response. Changes to the text must be saved using the Save button on the bottom right of the records review page.

- The "Accept Answer" checkbox allows you to keep track of which responses you have accepted and approved. Acceptance status is purely for your information, and has no impact on program performance. Recall that overall Acceptance status is reported in aggregate at the List View of the Response Automation details page.

- The "Accept All" button marks all the records on the current page as accepted. It does not affect records not currently shown on the page.

- The search bar on the top right will filter displayed records based on the contents of the question or response.
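The interaction between paging and "Accept All" described above is easy to misread, so here is a small illustrative model of it. This is a sketch of the documented behavior (sets of 10, "Accept All" limited to the current page), not RegScale's implementation. The function names and the record layout are hypothetical.

```python
PAGE_SIZE = 10  # Responses are reviewed in sets of 10.


def page_of(records, page_number, page_size=PAGE_SIZE):
    """Return the records shown on a 1-based page number."""
    start = (page_number - 1) * page_size
    return records[start:start + page_size]


def accept_all(records, page_number):
    """Mark only the records on the given page as accepted."""
    for record in page_of(records, page_number):
        record["accepted"] = True


if __name__ == "__main__":
    # 25 made-up records: pages 1 and 2 hold 10 each, page 3 holds 5.
    records = [{"id": i, "accepted": False} for i in range(1, 26)]
    accept_all(records, page_number=2)
    accepted_ids = [r["id"] for r in records if r["accepted"]]
    print(f"Accepted {len(accepted_ids)} of {len(records)} records: {accepted_ids}")
```

In this model, accepting everything in a 25-question set would take three "Accept All" clicks, one per page.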

Exporting Results

- When you are satisfied with the responses, you can export the resulting questions and answers to an Excel file using the "Export" option of the "More Options" menu.

Troubleshooting

- "There is an error submitting the job": This notification means the RegML backend cannot be reached to submit the job. Confirm that the necessary infrastructure components are in place and that the environment variables are set.

- Response Automation job stuck at “In Progress” status: This means the RegML backend is unable to communicate back to RegScale to update the status of the job. Ensure that the RegML infrastructure is present in the environment and that the environment is correctly configured.

- Response Automation incorrectly identified something as a question, or failed to identify a question: Language models sometimes make mistakes in recognizing when text is intended as a request for information, especially when the language of the request is ambiguous or not clearly phrased as a question. New questions can be added manually to the Response Automation set. Please share any concerns or experiences with your customer service representative so that we can use this information to improve model performance.
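For the first two items above, an administrator may want a quick check that the expected environment variables are set and that the backend host accepts connections. The sketch below is one possible approach using only the Python standard library. The variable names `REGML_BACKEND_URL` and `REGSCALE_URL` are placeholders invented for this example; this page does not name the actual variables, so substitute the ones your deployment uses.

```python
import os
import socket
from urllib.parse import urlparse

# Placeholder names, NOT taken from RegScale documentation.
REQUIRED_ENV_VARS = ["REGML_BACKEND_URL", "REGSCALE_URL"]


def missing_env_vars(names, environ=None):
    """Return the names that are unset or empty in the environment."""
    environ = os.environ if environ is None else environ
    return [name for name in names if not environ.get(name)]


def can_connect(url, timeout=3.0):
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parsed = urlparse(url)
    if parsed.hostname is None:
        return False
    # Fall back to the scheme's default port when the URL does not specify one.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    missing = missing_env_vars(REQUIRED_ENV_VARS)
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        for name in REQUIRED_ENV_VARS:
            reachable = can_connect(os.environ[name])
            print(f"{name}: {'reachable' if reachable else 'NOT reachable'}")
```

A successful TCP connection only shows that something is listening at that address. It does not prove that the service is healthy or that RegML can call back to RegScale, so treat it as a first check rather than a full diagnosis.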
